Tic tac toe

Lab setup

First, make sure you have completed the initial setup.

If you are part of a course

-

Open Terminal. Run the update command to make sure you have the latest code.

$ mwc update -

Move to this lab's directory.

$ cd ~/Desktop/making_with_code/mwc1/unit3/lab_tic_tac_toe

If you are working on your own

-

Move to your MWC directory.

$ cd ~/Desktop/making_with_code -

Get a copy of this lab's materials.

git clone https://git.makingwithcode.org/mwc/lab_tic_tac_toe.git

In this lab, we will continue to explore how object-oriented programming can model a problem. In this case, we'll implement the game of tic-tac-toe. To take an OOP approach, the first question we will ask is, "Which objects do we need?" We will definitely need a class for the game and another for a player (there are two players, but each can be an instance of the same class). However, we will actually represent the game using two models: one for the game logic and one for its view. The game will encode the rules of tic-tac-toe: how the game starts, what each player is allowed to do, how the game ends, and who wins. The view will take care of user interaction, including showing the game to the players and getting their actions.

The game, its view, and the players will interact using state, or a minimal data structure keeping track of what's going on in a game. Think of game state as a saved game, keeping track of everything necessary to restore a game. For tic-tac-toe, state will consist of a dict like this:

{

"board": ['-', '-', '-', '-', '-', '-', '-', '-', '-'],

"player_x": True,

}The board is a list containing nine single-character strings, keeping track of what's in each space

of the board. A space with - is empty, otherwise a space may have X or O. The second key,

player_x, keeps track of whether or not it's player X's turn.

We will work with the following classes:

ttt.game.TTTGame: Keeps track of game state.ttt.view.TTTView: Handles user interaction.ttt.player.TTTHumanPlayer: Represents a human player.ttt.player.TTTComputerPlayer: Represents a computer player.

Test out the code

Navigate to the lab folder and enter a Poetry shell.

💻

Run python play_ttt.py. As you play, think about how this game works.

You may notice that the game has a pretty unsatisfying ending.

Read the code

Open this project's code by running code .

Read every line of code in play_ttt.py, as well as all the modules in ttt.

The goal is to trace the execution of the program, following how a method

in one class calls a method in another.

player0 = TTTHumanPlayer("Player 1")

player1 = TTTHumanPlayer("Player 2")

game = TTTGame()

view = TTTView(player0, player1)

state = game.get_initial_state()

view.greet()

while not game.is_over(state):

action = view.get_action(state)

state = game.get_next_state(state, action)

view.conclude(state)In order to understand this code, you need to understand not just what each line does, but how the objects and methods interact to produce the game.

Improve the game

Fix TTTGame.check_winner. Right now, the game always reports that nobody has won the game.

The game therefore doesn't end until all the spaces are filled, even if a player has three-in-a-row.

The reason for this is that TTTGame.is_over tries to check three

possible cases for why the game might be over:

- The board is full (using

TTTGame.board_is_full) - Player

Xhas three in a row (usingTTTGame.check_winner) - Player

Ohas three in a row (usingTTTGame.check_winner)

Unfortunately, TTTGame.check_winner always reports that the player has not won. This is where you come in.

Programming an intelligent computer player

Try playing with a computer player. In play_ttt.py, replace one of the TTTHumanPlayers with

a TTTComputerPlayer. Now you can play against a computer opponent:

player0 = TTTHumanPlayer("Player 1")

player1 = TTTComputerPlayer("Robot")

game = TTTGame()

view = TTTView(player0, player1)Now when you play, the second player is a computer and chooses moves automatically. Unfortunately,

the computer plays terribly. The reason for this is that ttt.player.TTTComputerPlayer uses

a strategy.random_strategy.RandomStrategy which is as bad as it sounds. Each turn, the computer

randomly selects an action from the actions available.

How would an intelligent computer play tic-tac-toe?

Before we can program an intelligent opponent which makes this game more fun, we need to think about

how an intelligent computer would play tic-tac-tow.

Consider the following examples, where

you are playing as X and it's your turn. What action should you take and why?

Write your answers in notes.md.

| O | O | | O | X | X | O |

---+---+--- ---+---+--- ---+---+--- ---+---+---

X | X | | X | X | O | O | |

---+---+--- ---+---+--- ---+---+--- ---+---+---

| | | | O | | | | You probably used a similar thought process for each of these cases: My best action is the one where the opponent's best response is the least bad for me, or--even better--where I win right away. So if I know my opponent's best response to each move I might make, then I can figure out which of my moves is best.

How would my opponent figure out her best move? In the exact same way! If she can win right away, she'll do that. Otherwise, she'll think about my best response to each of her possible moves, and she'll choose the move where my best response is least bad for her.

This logic keeps going: I can plan out how I would respond to your response to my move... In order to solve a problem (which move I should make), we need to solve another similar problem (which move you should make). This structure is called recursion. If recursion keeps going forever, it's not useful for solving a problem. Fortunately, tic-tac-toe doesn't keep going forever. If I play out all the possibilities of a game, each will quickly reach a situation where one of us wins, or where the game ends without a winner. And then I can work backwards from there.

Let's formalize this a bit with some definitions:

- A game state is a snapshot of a moment in a possible game, including the board position and whose turn it is.

- An action is a player's move. Each action is a link between the current game state and a future game state.

- A reward is a number attached to each state, defining how good or bad it is to be in that state. Think of a reward as a number of points collected for visiting a state. In tic-tac-toe, you don't get points along the way; all that matters is what happens at the end of the game. We'll define the reward for states where X has won the game as 1 and the reward for states where O has won the game as -1. The reward for all other states (incomplete games and games which ended in draws) is 0.

- An objective is a definition of what players want in the game. In tic-tac-toe, X's objective is to get the highest rewards possible; O's objective is to get the lowest rewards possible. So X should try to make plays leading to end-states where X wins, and O should try to make plays leading to end-states where O wins.

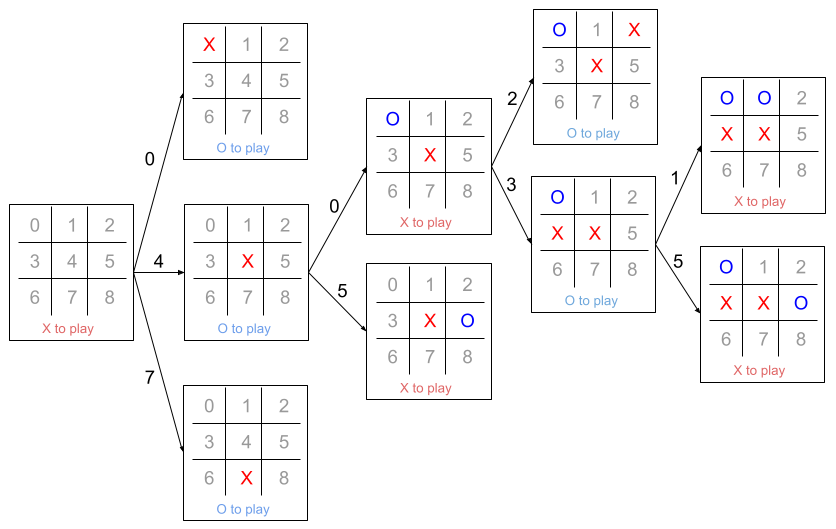

With these definitions, we can think of a tic tac toe game as a huge branching tree of possible future states. When it's X's turn, they choose an action which leads to another state. From there, O gets to choose the next action, leading to yet another state.

Now we can write pseudocode for how to choose the best action at any particular state:

- Get all the possible actions. For each one:

- Get the state which would result from that action.

- Add up the reward for the result state, including the rewards for all future states following that state.

- If the player is 'X', choose the action leading to the highest total reward.

- If the player is 'O', choose the action leading to the lowest total reward.

These functions are implemented for you in strategy.lookahead_strategy.LookaheadStrategy.

Load them into interactive Python and use them to calculate the value of each of the

game states shown above.

| O | O | | O | X | X | O |

---+---+--- ---+---+--- ---+---+--- ---+---+---

X | X | | X | X | O | O | |

---+---+--- ---+---+--- ---+---+--- ---+---+---

| | | | O | | | | As in TTTGame, a board is represented by a list of nine values.

The first state above can be represented as ['-', 'O', 'O', 'X', 'X', '-', '-', '-', '-'].

To find the value of the first state, enter the Python shell (python) and run the following:

>>> from ttt.game import TTTGame

>>> from strategy.lookahead_strategy import LookaheadStrategy

>>> game = TTTGame()

>>> strategy = LookaheadStrategy(game)

>>> state = {"board": ['-','O','O','X','X','-','-','-','-'], "player_x": True}

>>> strategy.get_current_and_future_reward(state)When you initialize LookaheadStrategy with the optional argument explain=True,

the program will print out every step in its reasoning.

For example, we can find the value of the first state by doing the following:

>>> from ttt.game import TTTGame

>>> from strategy.lookahead_strategy import LookaheadStrategy

>>> game = TTTGame()

>>> strategy = LookaheadStrategy(game, explain=True)

>>> state = {"board": ['-','O','O','X','X','-','-','-','-'], "player_x": True}

>>> strategy.get_current_and_future_reward(state, explain=True)You can get the inital game state using game.get_initial_state().

What is the current and future reward for this state? What does this mean?

Write your answer in notes.md.

Giving the computer player a better strategy

💻

Replace the computer player's RandomStrategy with a

LookaheadStrategy. Sometimes there are multiple moves which are equally good. If you

want the computer player to choose randomly from these, use the optional deterministic

argument:

self.strategy = LookaheadStrategy(TTTGame(), deterministic=False)Nim

Now it's your turn to write another game. The game of Nim can be played by two players, a sheet of paper, and a pencil. The game starts with four rows of lines, as shown here:

|

|||

|||||

|||||||On each player's turn, she must cross off one, two, or three lines--all from the same row. Whoever crosses off the last line loses the game.

View and player classes are provided for you in the nim directory, but the game class is not provided.

Instead, you have a nim.game_stub.NimGameStub class. A stub is a minimal version of a class which stands in for the

real class, which hasn't yet been written. The stub has all the correct

methods, and their inputs and outputs are the right kind of thing,

but it doesn't really do anything.

Therefore, play_nim.py works superficially, but the game mechanics do not work.

💻

Write a NimGame class and replace NimGameStub with your class to make the game work.

You should implement all the same methods as NimGameStub.

An intelligent Nim player

Here's a pleasant surprise: The exact same LookaheadStrategy which we used to make the TTTComputerPlayer intelligent

can also work for nim!

💻

Give the NimComputerPlayer an intelligent strategy.

The state tree for nim is really large, so the computer player will take a long time

to choose its move. You can limit how far ahead the nim player looks by using the optional max_depth argument.

Limiting the search depth trades off speed for lower-quality moves. See if you can find a good balance, where

the player plays well enough without taking too long to think about its moves.

self.strategy = LookaheadStrategy(TTTGame(), max_depth=2, deterministic=False)Why does the same strategy work in tic-tac-toe and nim? Would it work for other games too?